Some of you might like the following article I put together last week:

securityconscious.

You should not be using IE in general, but if you do, this New Internet Explorer vulnerability affecting all versions of IE gives you yet another reason to switch to Firefox + NoScript or, if you are paranoid enough, Firefox + NoScript + Request Policy :). Just switching to another browser without security extensions won’t really cut it, because of this: Expanding the Attack Surface.

Feedback and/or contributions to make this better are appreciated and welcome.

Highlighted quotes of the week:

“A slogan for Information Security: ‘The more you know, the less you trust.’” – Dino A. Dai Zovi

“Dear web developer, exporting personal data of Irish citizens outside EU is not OK, it is against the law Data Protection Act” – Brian Honan

“Sandboxes are like WAFs – either they get bypassed or people just get pwned through stuff the sandbox/WAF allows anyway” – Stefan Esser

“With Sandboxing in play one needs at least 3 exploits, not two. 1 memcorruption + 1 infoleak + 1 sandbox escape.” – Stefan Esser

“Ok so out of 16000 passwords on scrollwars 3000 people have ones which couldnt be cracked in less than 30 mins. Not to good.” – Martin Bos

“So lemme get this straight. Assange leaked 2k + cablegates but now he is pissed that someone leaked the file on his rape case?” – Martin Bos

“Dear reporters: Quantum Crypto has real problems with unexpected attacker controlled input. Raw photons riskier than packets!” – Dan Kaminsky

To view the full security news for this week, please click here (divided into categories, with a category index at the top). The categories this week include (please click to go directly to what you care about): Hacking Incidents / Cybercrime, Unpatched Vulnerabilities, Software Updates, Business Case for Security, Web Technologies, Network Security, Cloud Security, Privacy, Mobile Security, Cryptography / Encryption, General, Tools, Funny

Highlighted news items of the week (No categories):

Not patched: IIS 7.5 0-Day DoS (processing FTP requests), New Internet Explorer vulnerability affecting all versions of IE

Updated/Patched: MySQL 5.5 released, Microsoft withdraws flawed Outlook update, Microsoft releases Security Essentials 2, Opera 11.00 has been released!, Google updates Chrome Beta & Dev channels, Secunia releases PSI version 2.0, Back door in HP network storage solution – Update, Oracle Unveils Oracle VM VirtualBox 4.0, When a smart card can root your computer (OpenSC patches)

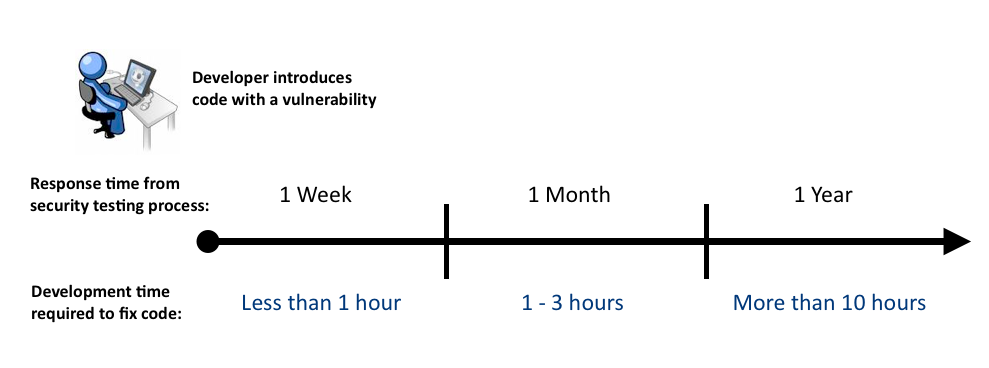

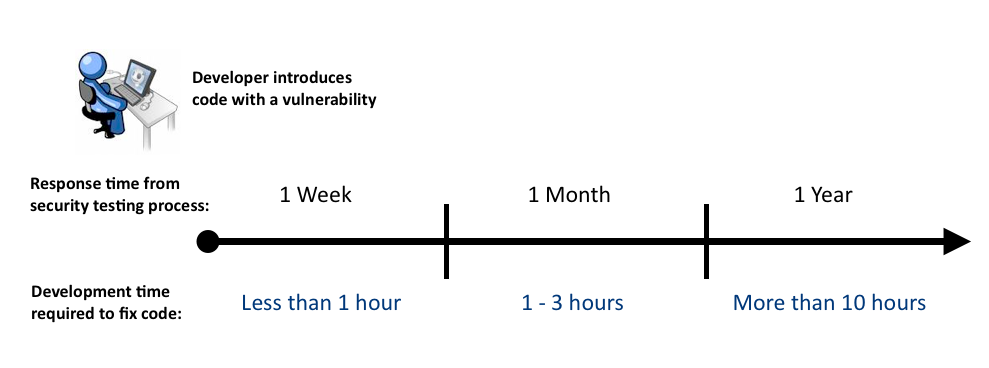

The length of time between when a developer writes a vulnerable piece of code and when the issue is reported by a software security testing process is vitally important. The more time in between, the more effort the development group must expend to fix the code. Therefore the speed and frequency of the testing process, whether going with dynamic scanning, binary analysis, pen-testing, static analysis, line-by-line source code review, etc., matters a great deal.

2010 has been notable for a number of reasons: the advent of a coalition government in the UK, followed by swingeing spending cuts, and a turbulent economic picture. In a regulatory sense, too, 2010 stands out: increasingly active regulators have increased fines for organisations which are found to have failed to comply with basic levels of protection around data. A new record was set with the £17.5 million FSA fine on Goldman Sachs. Moreover, the emphasis has broadened, with the ICO and FSA no longer focusing solely on the financial sector: both Hertfordshire County Council and A4E were the subject of fines for weak controls around personal data. At the same time, other regulation continues to apply – many organisations are struggling with PCI-DSS compliance, not only in the commercial sector, but also in the state sector, where cards are important for covering payments for basic services.

We recently met with leaders from the U.S. financial services sector, and they asked a number of questions about recent trends in insider threat activities. We are often asked these types of questions, and we can answer many of them right away. Others require more extensive data mining in our case database. In this entry, we address the following question:

Between current employees, former employees, and contractors, is one group most likely to commit these crimes?

The answer to this question has some important implications, and not just for these particular meeting attendees. If, across all types of incidents and all sectors, the vast majority of incidents are caused by current, full-time employees, organizations may focus on that group to address the vulnerability. If, on the other hand, there are a large number of part-time contractors or former employees, there may be different controls that an organization should consider using.

Debora Plunkett, head of the NSA’s Information Assurance Directorate, has confirmed what many security experts suspected to be true: no computer network can be considered completely and utterly impenetrable – not even that of the NSA.

‘There’s no such thing as ‘secure’ any more,’ she said to the attendees of a cyber security forum sponsored by the Atlantic and Government Executive media organizations, and confirmed that the NSA works under the assumption that various parts of their systems have already been compromised, and is adjusting its actions accordingly.

Cloud Security highlights of the week

The Cloud Security Alliance’s matrix is a controls framework that gives a detailed understanding of security concepts and principles that are aligned to the CSA’s 13 domains

The Cloud Security Alliance (CSA) has launched a revision of the Cloud Controls Matrix (CCM). The new matrix (version 1.1), available for free download here, is designed to provide fundamental security principles to guide cloud vendors and help prospective cloud customers assess the overall security risk of a cloud provider.

The matrix provides a controls framework that gives a detailed understanding of security concepts and principles that are aligned to the CSA’s 13 domains. The foundations of the CCM rest on its customized relationship to other industry-accepted security standards, regulations, and controls frameworks such as ISO 27001/27002, ISACA COBIT, PCI, and NIST. The latest version includes more thorough mapping around NIST and GAAP, as part of more ‘holistic guidance’, according to CSA.

The cloud is certainly going to change some things about malware infection. When a desktop is reset to a clean state every time an employee logs in, you now have to wonder how malicious attackers are going to maintain persistent access to the Enterprise. This is similar to what happens when an infected computer is re-imaged only to end up infected all over again.

There are several ways to maintain persistent access without having an executable-in-waiting on the filesystem. Memory-only based injection is an old concept. It has the advantage of defeating disk-based security. One common observation is that such malware doesn’t survive reboot. That is true in the sense that the malware is not a service or a driver – but this doesn’t mean the malware will go away. Stated differently, the malware can still be persistent even without a registry key to survive reboot. This applies to the problem of re-infection after re-imaging (a serious and expensive problem today in the Enterprise) and it also applies to the future of cloud computing (where desktop reset is considered a way to combat malware persistence).

Secure Network Administration highlights of the week (please remember this and more news related to this category can be found here: Network Security):

Imagine there is an un-patched Internet Explorer vuln in the wild. While the vendor scrambles to dev/test/QA and prime the release for hundreds of millions of users (I’ve been there… it takes time), some organizations may choose to adjust their defensive posture by suggesting things like, “Use an alternate browser until a patch is made available”.

So, your users happily use Firefox for browsing the Internet, thinking they are safe from any IE 0dayz… after all, IE vulnerabilities only affect IE, right? Unfortunately, the situation isn’t that simple. In some cases, it is possible to control seemingly unrelated applications on the user’s machine through the browser.

Ever tested some of the more exotic transport protocols?

SCTP is interesting … multihoming means you can have several ips involved on each side of a connection (association in sctp speak) … so when you move from wired to wireless your ssh session still is fine. If you find a proper SCTP ssh, of course.

Testing it on Ubuntu LTS, though, using socat for glue… a listening SCTP socket is invisible in netstat -ln. Fun. tcp, udp, raw sockets are visible … but sctp isn’t.

socat SCTP-LISTEN:8080,fork TCP-CONNECT:localhost:22

Nice, stealthy backdoor. Does not show in netstat(8) or ss(8). Combine with socat TCP-LISTEN:2223 SCTP-CONNECT:localhost:8080 on a remote host and we have a completely stealthy tunnel, if the firewall is mildly clue-challenged.
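For readers who want to reproduce the netstat observation without socat, here is a minimal Python sketch of just the listening side (it does no forwarding to port 22). It assumes a Linux host whose kernel has SCTP support; the function name `open_sctp_listener` and the loopback default are illustrative choices, not something from the quoted post. Whether the resulting socket shows up in a given netstat or ss build is exactly what the excerpt above reports and is not verified here.

```python
import socket

# IANA protocol number for SCTP is 132; used as a fallback in case this
# Python build does not expose socket.IPPROTO_SCTP.
SCTP_PROTO = getattr(socket, "IPPROTO_SCTP", 132)


def open_sctp_listener(port, host="127.0.0.1"):
    """Return a listening one-to-one style SCTP socket, or None if unavailable.

    Hypothetical helper: it only opens the listener, roughly the first half
    of what `socat SCTP-LISTEN:<port>` does.
    """
    try:
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM, SCTP_PROTO)
    except OSError:
        # Usually means the kernel has no SCTP support available.
        return None
    try:
        sock.bind((host, port))
        sock.listen(1)
    except OSError:
        sock.close()
        return None
    return sock
```

Calling `open_sctp_listener(8080)` and then running `netstat -ln` in another terminal is one way to check the behaviour on your own distribution; remember to close the returned socket afterwards.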

Recently I’ve been presenting about ‘Wi-Fi (In)Security’ at the GOVCERT.NL Symposium 2010 in Rotterdam (November 2010) and (a reduced version) at the 4th CCN-CERT meeting in Madrid (in Spanish; December 2010). The full presentation can be found on Taddong’s lab web page. My main goal was to create awareness about all the still prevalent Wi-Fi vulnerabilities, threats, and security risks we are facing both on the wireless infrastructure and the client side. It is almost year 2011, and there is a general feeling that our Wi-Fi environments are pretty secure, as we already have WPA2-Enterprise with multiple authentication methods based on 802.1x/EAP to choose from. However, there are still lots of things to be aware of, especially on the client side (including laptops and mobile devices).

On the infrastructure side, in the best case scenario, we will end up with two worlds: the secure one, based on WPA2-PSK/Enterprise, and the insecure one, based on open Wi-Fi networks (e.g. hotspots). This is also reflected in the Wi-Fi Alliance roadmap, and it is their goal for 2014 (yes, 3 years from now!).

As a follow-up to my post on the USB Stick O’ Death, I wanted to go a little more in depth on the subject of AV evasion. Following my release of (some of) my code for obfuscating my payload, it became apparent that researchers at various antivirus companies read my blog (Oh hai derr researchers! Great to have you with us! I can haz job?) and updated their virus definitions to detect my malicious payload. To be perfectly honest, I was hoping this would happen, as I figured it would be a teachable moment on just how ineffective current approaches to virus detection can be, give readers a real world look at how AV responds to new threats, and provide one of the possible approaches an attacker would take to evading AV software. My main goal in this research was to see how much effort it would take to become undetectable again, and the answer was ‘virtually none’.

In this post, I will first look at how I was able to evade detection by many AV products simply by using a different compiler and by stripping debugging symbols. Then, I will look at how I was able to defeat Microsoft’s (and many other AV products’) detection mechanisms simply by ‘waiting out’ the timeout period of their simulations of my program’s execution. However, a quick note before we begin: I’m by no means an expert on antivirus, as this exercise was partly to further my understanding of how AV works, and these explanations and techniques are based on my admittedly poor understanding of the technologies behind them. If I mistakenly claim something that isn’t true, or you can shed light on some areas that I neglect, please comment. I would love to learn from you.

The purpose of this article is to explore the many different forensic artifacts that can be discovered from Windows prefetch files. The first section will briefly cover the prefetch file and the prefetching process. The second section will discuss the forensic value of the prefetch file, specifically the forensic artifacts the prefetch file contains, and the story that can be revealed by the mere existence or absence of prefetch files. The article will conclude with some examples of how you can use prefetch files to aid in forensic analysis and what to watch out for when using prefetch files to prove or disprove a case.

The main purpose of this article is to explain the use of prefetching in forensic analysis, but it is important to have a baseline understanding of the technology to provide a good foundation for how and why prefetch files contain certain artifacts. The prefetching process utilized by Microsoft was created to speed up the Windows operating system and application startup. The prefetching process occurs when the operating system, specifically the Windows Cache Manager, monitors certain elements of data that are extracted from the disk into memory. This monitoring occurs each time the system is started, covering the first two minutes of the boot process, then sixty seconds after all the Win32 services have completed their startup, and the first ten seconds after an application is executed.

Secure Development highlights of the week (please remember this and more news related to this category can be found here: Web Technologies):

Unencrypted public access wireless networks are an unbelievably harmful technology devised with no regard for the operation of the modern web – and they introduce far more problems than immediately apparent. The continued use of unencrypted wifi at the municipal level and in consumer-oriented settings is simply inexcusable, even if all the major websites on the Internet can be pressured into employing HTTPS-only access and Strict Transport Security by default.

Straightforward snooping and cute tricks such as sslstrip aside – all of them still deadly effective, by the way – there are many less obvious problems we simply can’t solve any time soon:

I was playing around with the window.history object. In general, it’s quite limited and can be considered rather useless. However, HTML5 brings some new methods to the History object in order to make it more powerful.

In this article I will take a quick glance at a quite peculiar method called pushState(). There is one security related issue I want to point out, which I’m considering rather harmful.

history.pushState()

history.pushState() was introduced in HTML5 and it’s meant for modifying history entries.

By using pushState() we’re allowed to alter the visible URL in the address bar without reloading the document itself. Sounds a bit risky, doesn’t it?
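As background the excerpt does not spell out: browsers only accept a pushState() URL that is same-origin with the current document, so the address bar can be rewritten to any path on the site but not to another host. The sketch below is a simplified Python model of that check, not browser code; `origin` and `push_state_allowed` are hypothetical helper names, and the default-port table only covers http and https.

```python
from urllib.parse import urljoin, urlsplit

# Default ports, so that https://example.com and https://example.com:443
# compare as the same origin. Only http/https are modelled here.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url):
    """Return the (scheme, host, port) triple that identifies an origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port or DEFAULT_PORTS.get(scheme)
    return (scheme, (parts.hostname or "").lower(), port)


def push_state_allowed(current_url, new_url):
    """Simplified model: the resolved target must share the current origin."""
    resolved = urljoin(current_url, new_url)  # relative URLs resolve first
    return origin(resolved) == origin(current_url)
```

Under this model `push_state_allowed("https://example.com/app", "/login")` is true, which is the risky part: any page on a site, including one carrying injected script, can make the address bar show a different path on that same site.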

Once you have deployed ModSecurity, you have probably been faced with this question:

How should I configure my Web Application Firewall (WAF) to handle Authorized Vulnerability Scanning (AVS) traffic?

The answer to this question is not quite as easy as it may first appear. This question arises when organizations are running their own internal web application vulnerability scans. They soon realize that they need to figure out how to get their security tools (scanner and WAF) to ‘play nice’ with each other.

Before deciding on how to reconfigure ModSecurity with regard to handling the scanning traffic, you first must confirm the goal of your scanning efforts. There are usually two main scanning goals:

* To identify all vulnerabilities within a target web application, or

* To identify all vulnerabilities within a target web application that are remotely exploitable by an external attacker.

You may want to reread the second item to make sure that you understand the difference, as it is factoring in the exploitability of a vulnerability in a production web application.
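One way to read the two goals, sketched in Python rather than ModSecurity rule syntax: for the first goal the authorized scanner is let past the WAF so it sees the raw application, while for the second it is treated like any external attacker. That mapping is my interpretation, not something the excerpt states, and the network range, enum, and function names are all hypothetical.

```python
import ipaddress
from enum import Enum


class ScanGoal(Enum):
    ALL_VULNERABILITIES = 1    # find everything in the application
    REMOTELY_EXPLOITABLE = 2   # find only what gets through production defences


# Hypothetical scanner address range (a documentation-only network).
SCANNER_NETWORKS = [ipaddress.ip_network("192.0.2.0/28")]


def is_authorized_scanner(client_ip):
    """True if the client address belongs to the authorized scanner range."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in SCANNER_NETWORKS)


def waf_should_inspect(client_ip, goal):
    """Decide whether the WAF should inspect (and possibly block) a request."""
    if goal is ScanGoal.ALL_VULNERABILITIES and is_authorized_scanner(client_ip):
        return False  # assumed policy: scanner bypasses the WAF for goal 1
    return True       # everyone else, and goal 2 scans, go through the WAF
```

In a real deployment the same decision would live in the WAF configuration itself; the point of the sketch is only that the scanning goal, not the scanner, determines the policy.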

Cracking hashes in the JavaScript cloud with Ravan

Password cracking and JavaScript are very rarely mentioned in the same sentence. JavaScript is a bad choice for the job for two primary reasons – it cannot run continuously for long periods without freezing the browser and it is way slower than native code.

HTML5 takes care of the first problem with WebWorkers: now any website can start a background JavaScript thread that can run continuously without causing stability issues for the browser. That is one hurdle passed.

The second issue of speed is becoming less relevant with each passing day as the speed of JavaScript engines is increasing at a greater rate than the increase of system speed. It might surprise most people how fast JavaScript actually is: 100,000 MD5 hashes/sec on an i5 machine (Opera). That’s the best number I could get from my system; in most cases it would vary between 50,000 – 100,000 MD5 hashes/sec. This is still about 100-115 times slower than native code on the same machine but that’s alright. What JavaScript lacks in outright speed can be more than made up for by its ability to distribute.
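The "ability to distribute" can be pictured as cutting the keyspace into independent slices that separate workers search on their own. The Python sketch below shows that idea only; it is not Ravan's actual protocol, which the excerpt does not describe, and the lowercase alphabet, slice size, and function names are illustrative assumptions. The slices are processed in a loop here, where a real system would hand each one to a different browser.

```python
import hashlib

ALPHABET = "abcdefghijklmnopqrstuvwxyz"  # assumed charset for the sketch


def index_to_candidate(index, length, alphabet=ALPHABET):
    """Map an integer in [0, len(alphabet)**length) to a fixed-length string."""
    chars = []
    for _ in range(length):
        index, rem = divmod(index, len(alphabet))
        chars.append(alphabet[rem])
    return "".join(reversed(chars))


def make_slices(total, slice_size):
    """Split [0, total) into half-open (start, end) work units."""
    return [(s, min(s + slice_size, total)) for s in range(0, total, slice_size)]


def crack_slice(target_md5, length, start, end):
    """Search one work unit; this is what a single worker would run."""
    for i in range(start, end):
        candidate = index_to_candidate(i, length)
        if hashlib.md5(candidate.encode()).hexdigest() == target_md5:
            return candidate
    return None


def crack(target_md5, length, slice_size=5000):
    """Coordinator: hand out slices until one of them reports a match."""
    total = len(ALPHABET) ** length
    for start, end in make_slices(total, slice_size):
        found = crack_slice(target_md5, length, start, end)
        if found is not None:
            return found
    return None
```

For scale: six lowercase letters give 26^6 = 308,915,776 candidates, so at the quoted 100,000 hashes/sec one browser would need roughly 51 minutes to exhaust them, and ten cooperating browsers about five.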

It boggles my mind that this is apparently a big question for techies and, to me, is a perfect example of the Silicon Valley mindset that doesn’t understand how to build products that real people want to use.

The short answer is that OpenID is the worst possible ‘solution’ I have ever seen in my entire life to a problem that most people don’t really have. That’s what’s ‘wrong’ with it.

To answer the most immediate question of ‘isn’t having to register and log into many sites a big problem that everyone has?,’ I will say this: No, it’s not. Regular normal people have a number of solutions to this problem. Here’s some of them:

Google and other search engines are used for many purposes, such as finding useful information, important websites and the latest news on different topics. Google indexes a huge number of web pages, and that number grows daily. From the security perspective, these indexed pages may contain different kinds of sensitive information.

Google hacking involves using advanced operators in the Google search engine to locate specific strings of text within search results. One of the more popular examples is finding specific versions of vulnerable Web applications.
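To make "advanced operators" concrete, here is a small Python sketch that assembles such a query from operators like site:, inurl: and intitle:, plus a quoted version banner. The helper names and the "ExampleApp" banner are made up for illustration; only run queries like this against sites you are authorized to assess.

```python
from urllib.parse import quote_plus


def build_dork(terms=(), **operators):
    """Combine operator:value pairs and free-text terms into one query string."""
    parts = []
    for name, value in operators.items():
        if " " in value:
            value = f'"{value}"'  # quote multi-word operator values
        parts.append(f"{name}:{value}")
    for term in terms:
        # Quote multi-word terms so they are matched as an exact phrase.
        parts.append(f'"{term}"' if " " in term else term)
    return " ".join(parts)


def search_url(query):
    """URL-encode the query for a Google search link."""
    return "https://www.google.com/search?q=" + quote_plus(query)
```

For example, `build_dork(["Powered by ExampleApp 1.2"], site="example.com", inurl="admin")` yields `site:example.com inurl:admin "Powered by ExampleApp 1.2"`, a query looking for one specific version banner on one site.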

Finally, I leave you with the secure development featured article of the week courtesy of OWASP (Development Guide Series):

Identify interconnections in the Applications environment

- Identify all the interconnections to the application, such as through a corporate intranet, the internet, business partner connection, and associated access controls.

  - Administrative interfaces or portals separate from normal user application access

  - Web service access from other applications or over business partner connections

  - Database connections from this application as well as other connections from other applications if the database is shared

- Additionally, access to other applications needs to be considered if you plan to redirect a user to another application (internal or external) or use an iframe to present content from another site into your application. All these communications should be considered for how the application will be deployed so that the proper secure access can be built into the design. This includes the proper ingress and egress ports to be opened externally, to business partners over MPLS or IPSec VPNs, or internal to your own network.

- Least privilege should be exercised at every step in the design and deployment phase. All of the components of the application should be identified, including all the devices that will be supporting the application.

Source: Follow this link

Have a great week and weekend, and Merry Christmas!