REMINDER: We just released OWTF 1.0 "Lionheart". Please try it and give us feedback!

NOTE: This blog post is a guest post by Tao 'depierre' Sauvage, who authored one of the most successful GSoC 2014 projects for OWASP OWTF: OWASP OWTF: Automated Rankings

Helicopter view:

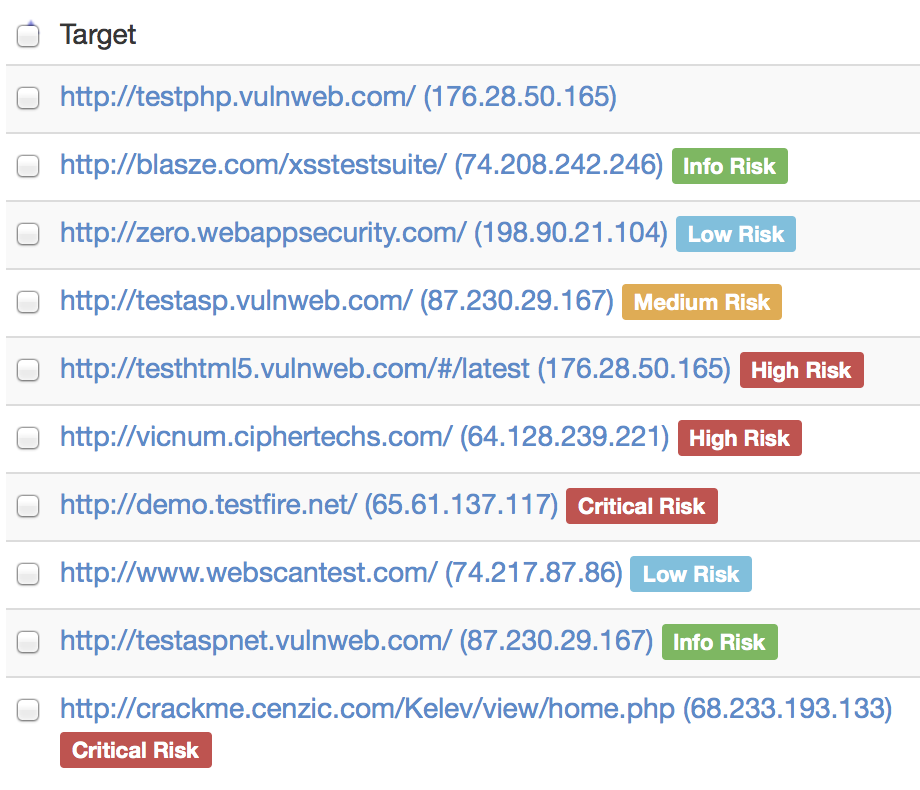

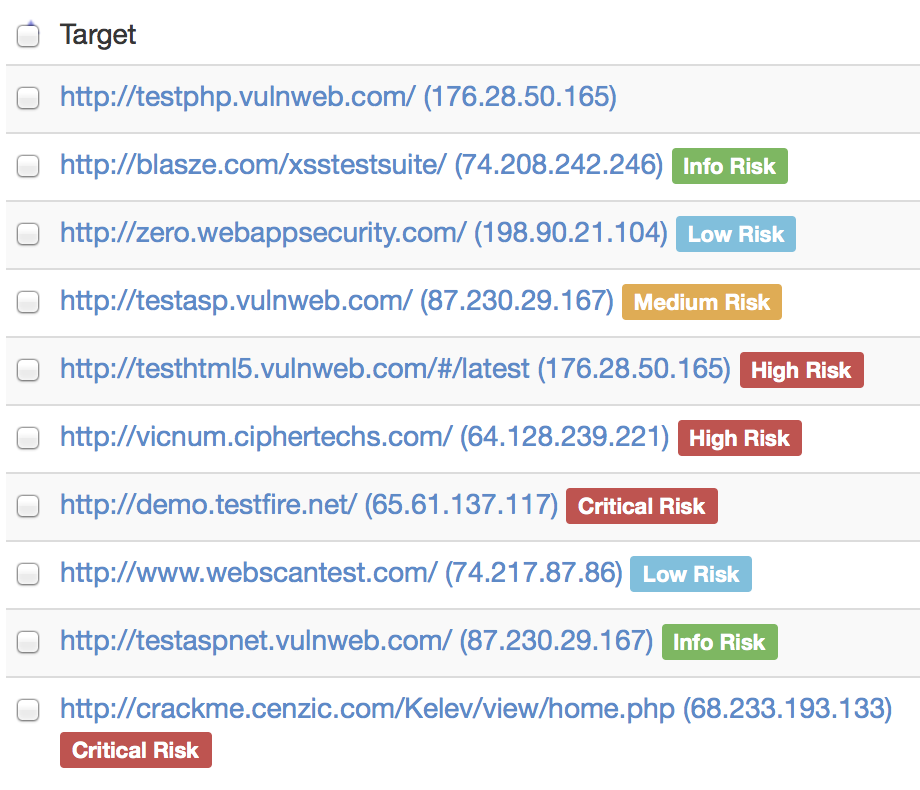

Ever had to test 30 URLs in 5 days and wondered where to start? OWTF will now take the MAX severity from ALL tools run against EACH target and tell you where 🙂

And with that, a big THANK YOU and welcome to Tao! 🙂

Introduction

Thanks to GSoC, I had the opportunity to work on the OWASP OWTF project. My task consisted of implementing an automated ranking system, but first of all, let us have a quick overview of OWTF.

As you surely know, because you are reading this blog, OWTF is a framework that helps users, whether security experts or curious newcomers, with security assessments. It takes care of the unpleasant part of the job and automatically generates an interactive report containing all the information for the selected plugins.

The powerful feature here is the interactive report. In a few words, instead of producing a report that cannot be modified (like Skipfish or W3AF, for instance), OWTF takes the user's actions into account. For instance, it might be useful to add a screenshot to plugin XY to clearly show the SQL injection that was found, and you can!

But before GSoC began, the user had to manually evaluate the security risks for each plugin. If you had an assessment covering 30 different websites, it would take a lot of time. The need was therefore obvious: OWTF had to pre-evaluate the security risks of its plugins.

The ranking system

By the end of GSoC, the automated ranking system had been completed and integrated into OWTF, which is good news for its users.

It has been developed following the simple rules below:

1. OWTF's ranking scale would be Unranked/No risk, Informational, Low, Medium, High and Critical risk (6 different values).

2. OWTF cannot automatically rank the outputs higher than High.

3. The automated rankings will be highlighted as such.

4. The user will be able to confirm/override the ranking.

5. If the ranking has been confirmed/overridden, the highlight is removed.

The first rule is fairly obvious: it is based on the scales most commonly used by security tools.

6 available rankings

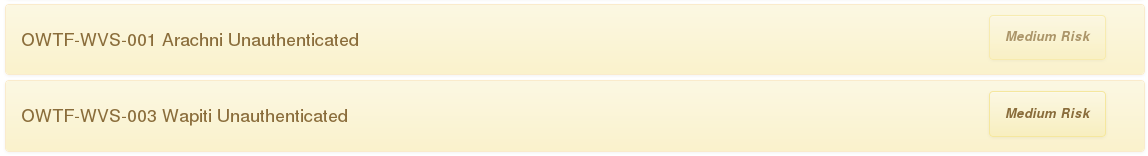

The second rule is more interesting. We, the OWASP contributors, decided that only the user can correctly estimate a critical risk, because only they are aware of all the parameters, such as the application context.

Highlight of the Critical ranking

Let's say that tool XY found an SQL injection on target AB. According to most vulnerability scoring systems, such a discovery is considered very dangerous. On the other hand, OWTF cannot know what the database contains or whether its information is critical. Therefore, instead of raising the big red critical flag, it ranks the discovery as High and lets the user decide whether it deserves a higher or lower ranking.
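To make the second rule concrete, here is a minimal sketch of how such a cap could work. The names `Ranking`, `AUTOMATED_CEILING` and `automated_ranking` are assumptions made for this illustration and are not taken from OWTF's code.

```python
from enum import IntEnum


class Ranking(IntEnum):
    """The six-value scale from rule 1, ordered by severity (hypothetical names)."""
    UNRANKED = 0
    INFORMATIONAL = 1
    LOW = 2
    MEDIUM = 3
    HIGH = 4
    CRITICAL = 5


# Rule 2: the highest ranking the automated system is allowed to assign.
AUTOMATED_CEILING = Ranking.HIGH


def automated_ranking(tool_ranking: Ranking) -> Ranking:
    """Clamp a tool-reported ranking so that it never exceeds HIGH."""
    return min(tool_ranking, AUTOMATED_CEILING)


# A scanner reports the SQL injection as critical; the cap keeps it at HIGH.
print(automated_ranking(Ranking.CRITICAL).name)  # HIGH
print(automated_ranking(Ranking.LOW).name)       # LOW
```

Only the user, who knows the application context, would then raise the finding to Critical.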

Difference between an automated ranking and a confirmed one

The last three rules follow from OWTF's philosophy, which is to have interactive reports. Automated rankings are therefore displayed more transparently than the ones set by the user. That way, a quick look at the report shows what has been found and whether OWTF or the user ranked it.
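Rules 3 to 5 boil down to a flag that travels with each ranking. The sketch below shows one way to model it; the `PluginRanking` class and its methods are hypothetical and only illustrate the behaviour described above, not OWTF's actual data model.

```python
from dataclasses import dataclass
from enum import IntEnum


class Ranking(IntEnum):
    """The six-value scale from rule 1 (hypothetical names)."""
    UNRANKED = 0
    INFORMATIONAL = 1
    LOW = 2
    MEDIUM = 3
    HIGH = 4
    CRITICAL = 5


@dataclass
class PluginRanking:
    """Hypothetical record holding one plugin's ranking in the report."""
    plugin: str
    ranking: Ranking
    automated: bool = True  # Rule 3: automated rankings are flagged as such.

    def confirm(self) -> None:
        """Rules 4 and 5: the user accepts the value, so the flag is cleared."""
        self.automated = False

    def override(self, ranking: Ranking) -> None:
        """Rules 4 and 5: the user sets a new value, which may be CRITICAL."""
        self.ranking = ranking
        self.automated = False


finding = PluginRanking("OWTF-WVS-001", Ranking.HIGH)
finding.override(Ranking.CRITICAL)
print(finding.ranking.name, finding.automated)  # CRITICAL False
```

A report renderer could then draw any entry with `automated` still set in the fainter style.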

Example of OWTF with its new automated ranking system

Visually, OWTF's automated ranking system saves users a lot of time. On the report above, a fraction of a second is enough to understand which security aspect should be reviewed first.
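The prioritisation itself amounts to keeping the highest severity reported by any tool for each target. Here is a small sketch of that idea, with made-up targets and findings; the function name `worst_per_target` is an assumption for this example.

```python
from collections import defaultdict

# Severity order from rule 1, lowest to highest (the index is the weight).
SCALE = ["Unranked", "Informational", "Low", "Medium", "High", "Critical"]


def worst_per_target(findings):
    """Return {target: highest ranking} from (target, tool, ranking) tuples."""
    worst = defaultdict(int)
    for target, _tool, ranking in findings:
        worst[target] = max(worst[target], SCALE.index(ranking))
    return {target: SCALE[level] for target, level in worst.items()}


# Made-up findings for two example targets.
findings = [
    ("site-a.example", "Arachni", "Medium"),
    ("site-a.example", "W3AF", "High"),
    ("site-b.example", "Skipfish", "Low"),
    ("site-b.example", "DirBuster", "Informational"),
]
summary = worst_per_target(findings)

# List the targets starting with the most severe one.
for target in sorted(summary, key=lambda t: SCALE.index(summary[t]), reverse=True):
    print(target, summary[target])
```

With the sample data, `site-a.example` comes first because one tool ranked it High.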

Current development state

At the time of writing, OWTF's automated ranking system supports a handful of tools and can rank at most 103 different plugins. The following table lists the supported tools and the number of plugins each one covers:

| Supported tools | Number of possible corresponding plugins |
| --- | --- |
| Arachni | 1 plugin (OWTF-WVS-001) |
| DirBuster | 12 plugins |
| Metasploit | 85 plugins |
| OWASP | 1 plugin (OWTF-CM-008) |
| robots.txt | 1 plugin (OWTF-IG-001) |
| Skipfish | 1 plugin (OWTF-WVS-006) |
| W3AF | 1 plugin (OWTF-WVS-004) |
| Wapiti | 1 plugin (OWTF-WVS-003) |

Supported plugins that can be ranked by OWTF

Even though 103 seems like a big number, a lot of plugins still have to be supported. The automated ranking system is at an early stage of development and will keep growing over time in order to support every OWTF plugin.

Reusable

During the development phase, I decided to extract the automated ranking system into a standalone library that I named ptp (Pentester's Tools Parser). This means that OWTF's ranking system can be reused by anyone and, to be honest, it is quite easy to embed ptp in your own project.
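To give a rough idea of what a parsing library of this kind has to do, the sketch below maps tool-specific severity labels onto one common scale. It is not ptp's API: the scanner names, labels and the `normalize` function are all invented for this illustration.

```python
# Hypothetical severity labels for two imaginary scanners; real tools differ.
TOOL_SCALES = {
    "scanner_x": {"info": "Informational", "minor": "Low", "major": "High"},
    "scanner_y": {"1": "Low", "2": "Medium", "3": "High"},
}


def normalize(tool, label):
    """Translate a tool-specific label to the common scale.

    Unknown tools or labels fall back to "Unranked".
    """
    return TOOL_SCALES.get(tool, {}).get(label.lower(), "Unranked")


print(normalize("scanner_x", "Major"))  # High
print(normalize("scanner_y", "2"))      # Medium
print(normalize("scanner_z", "high"))   # Unranked
```

For the real interface, ptp's own documentation is the place to look.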

If you are curious about developing a tool similar to OWTF but don't want to bother with ranking the discoveries, then have a look at ptp's documentation (linked in the resources section).

Resources

Before I let you go back to your activities, here are some useful links that will give you more information on the topic:

- github: OWTF's GitHub repository.

- github: ptp's GitHub repository.

- owtf-github: ptp's documentation.

- python: ptp's PyPI package.

If you made it this far, don't miss out on Tao 'depierre' Sauvage's personal blog here!